In high-volume customer support environments, maintaining consistent interaction quality is a constant challenge. Support managers and supervisors often handle thousands of cases and conversations every month, which makes manual quality reviews slow, subjective, and limited in coverage. As a result, recurring issues such as incomplete resolutions, poor communication, or non-compliance with service standards frequently go unnoticed until customer satisfaction drops.

The Quality Evaluation Agent addresses this challenge by introducing an AI-driven, standardized approach to quality monitoring. It automatically evaluates customer support cases and conversations against predefined criteria, assigns quality scores, and delivers actionable insights, helping teams improve service quality proactively and at scale.

What Is the Quality Evaluation Agent?

The Quality Evaluation Agent is an AI-powered quality management capability designed to evaluate customer support interactions consistently and objectively. By using structured evaluation criteria and automated evaluation plans, it helps organizations monitor interaction quality, identify gaps, and guide coaching efforts without relying on time-intensive manual reviews.

This makes it especially valuable for:

- Customer support managers

- Quality assurance (QA) teams

- Contact center supervisors

- CX leaders focused on service consistency

How the Quality Evaluation Framework Works

The Quality Evaluation Agent operates using a framework of three components: evaluation criteria define what is measured, evaluation plans define when and which interactions are reviewed, and evaluations are the scored results the agent produces. Together they keep quality assessments accurate and consistent.

Evaluation Criteria

Evaluation criteria act as the foundation of the quality assessment process. Supervisors create structured forms that include:

- Clear evaluation questions

- Multiple-choice or scaled answer options

- Defined scoring metrics

- Detailed instructions for how responses should be interpreted

The AI agent relies on these criteria to objectively assess customer interactions and assign scores based on compliance with defined standards.
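To make the structure of a criteria form concrete, here is a minimal sketch that models questions, scored answer options, and interpretation instructions as plain Python data classes. All class names, fields, and the sample question are hypothetical illustrations; they are not the product's actual schema or an out-of-the-box criteria definition.

```python
from dataclasses import dataclass, field


@dataclass
class AnswerOption:
    """One selectable answer and the score (0-100) it earns. Hypothetical model."""
    label: str
    score: int


@dataclass
class Question:
    """An evaluation question with answer options and interpretation guidance."""
    text: str
    options: list[AnswerOption]
    instructions: str  # how the evaluator should interpret responses

    def score_for(self, label: str) -> int:
        """Return the score attached to the chosen answer label."""
        for option in self.options:
            if option.label == label:
                return option.score
        raise ValueError(f"Unknown answer {label!r} for question {self.text!r}")


@dataclass
class EvaluationCriteria:
    """A named form grouping several questions. Hypothetical model."""
    name: str
    questions: list[Question] = field(default_factory=list)


# Hypothetical example form, not a built-in criteria definition.
resolution_check = EvaluationCriteria(
    name="Example Resolution Check",
    questions=[
        Question(
            text="Did the agent confirm the customer's issue was resolved?",
            options=[
                AnswerOption("Yes", 100),
                AnswerOption("Partially", 50),
                AnswerOption("No", 0),
            ],
            instructions="Answer 'Partially' if a fix was offered but not confirmed.",
        ),
    ],
)

print(resolution_check.questions[0].score_for("Partially"))  # 50
```

Keeping the interpretation instructions next to each question mirrors the idea that the AI agent needs both the answer options and the guidance for choosing between them.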

Evaluation Plan

Evaluation plans determine when, how, and which interactions are reviewed. Supervisors can configure plans to:

- Schedule automated evaluations

- Select interactions based on specific conditions

- Apply the appropriate evaluation criteria

- Request evaluations on demand

- Perform bulk evaluations to save time

This systematic approach ensures consistent quality reviews while minimizing manual effort.
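The selection logic of a plan can be pictured as a filter over records paired with the criteria to apply. The sketch below is an illustration under stated assumptions only: the `Case` fields, the `EvaluationPlan` class, and the sample condition are hypothetical and do not represent how plans are configured in the admin center.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional


@dataclass
class Case:
    """Minimal hypothetical case record."""
    case_id: str
    status: str
    priority: str
    closed_on: Optional[date]


@dataclass
class EvaluationPlan:
    """Hypothetical plan: which criteria to apply and which cases qualify."""
    name: str
    criteria_name: str
    condition: Callable[[Case], bool]

    def select(self, cases: list[Case]) -> list[Case]:
        """Return only the cases that satisfy the plan's condition."""
        return [c for c in cases if self.condition(c)]


plan = EvaluationPlan(
    name="Weekly high-priority review",
    criteria_name="Support Quality",
    condition=lambda c: c.status == "Resolved" and c.priority == "High",
)

cases = [
    Case("CAS-001", "Resolved", "High", date(2024, 5, 6)),
    Case("CAS-002", "Active", "High", None),
    Case("CAS-003", "Resolved", "Normal", date(2024, 5, 7)),
]

# A bulk run pairs every selected case with the plan's criteria.
requests = [(c.case_id, plan.criteria_name) for c in plan.select(cases)]
print(requests)  # [('CAS-001', 'Support Quality')]
```

Separating the condition from the criteria is what lets one plan be rescheduled or rerun in bulk without redefining what "quality" means.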

Evaluations

Once triggered, the Quality Evaluation Agent reviews cases and closed conversations and provides:

- Interaction summaries

- Quality scores

- Actionable insights

- Practical recommendations for improvement

These evaluations help supervisors proactively address gaps and improve future customer interactions.

Prerequisites for Setup

Before enabling the Quality Evaluation Agent, ensure the following requirements are met:

- Appropriate roles are assigned (Quality Administrator, Quality Manager, Quality Evaluator)

- Connection references are properly configured

- Microsoft Copilot credits are available

- Consent is provided for potential cross-region data movement

How to Enable the Quality Evaluation Agent

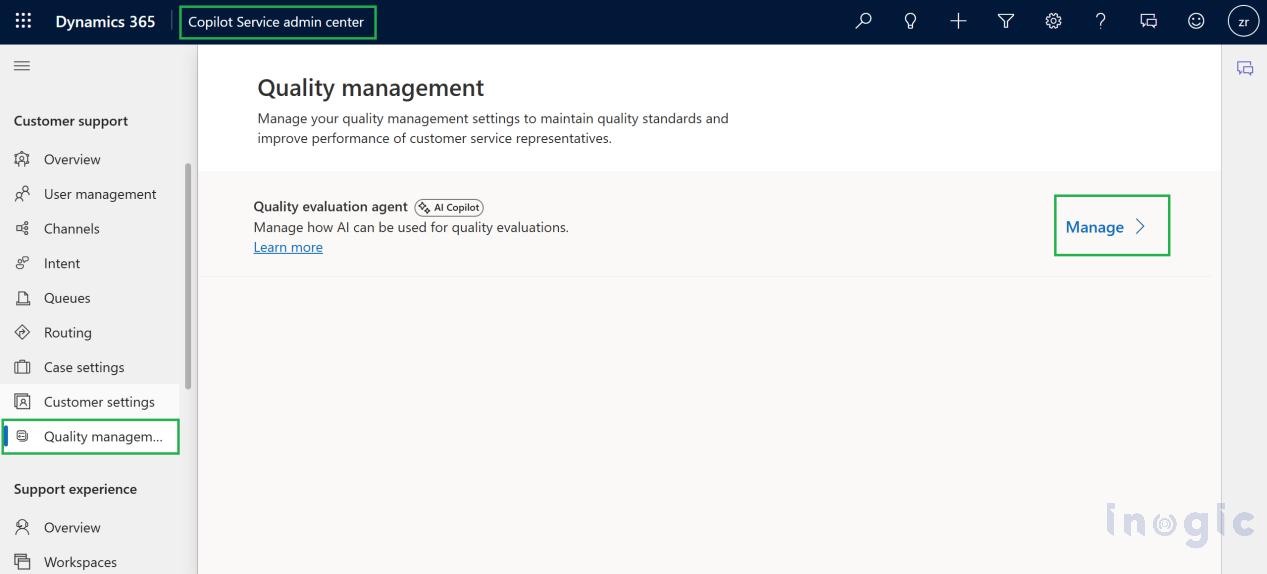

Supervisors can enable the Quality Evaluation Agent through the Copilot Service admin center by navigating to Customer Support > Quality Management.

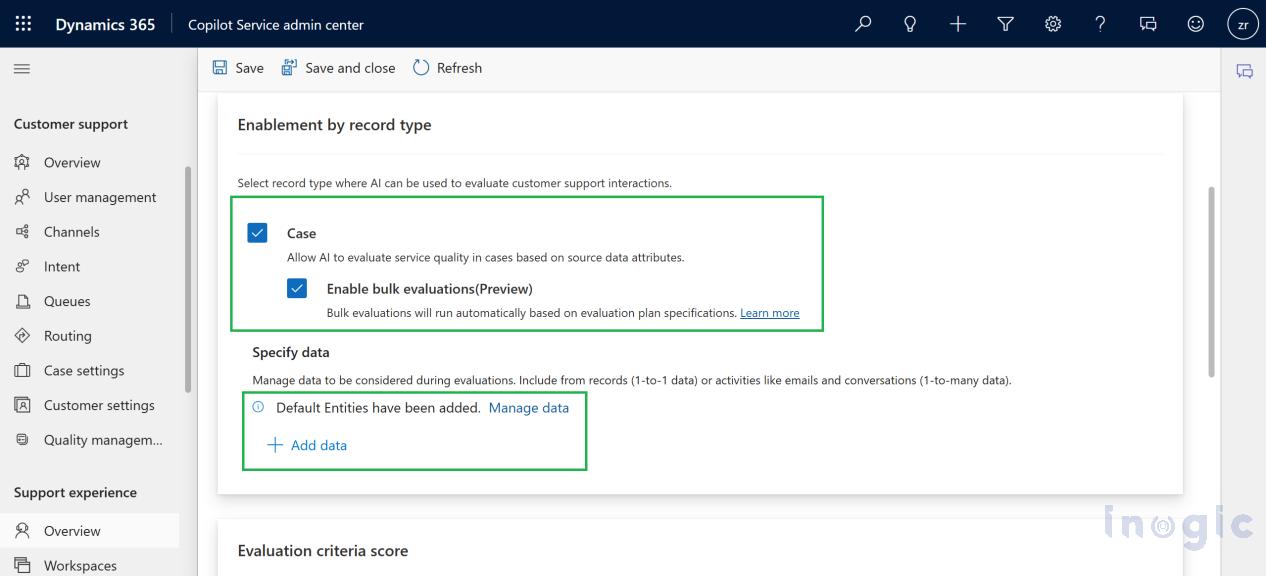

Key configuration steps include:

- Selecting the record type (Case)

- Enabling bulk evaluations for cases

- Managing input data sources used for evaluation

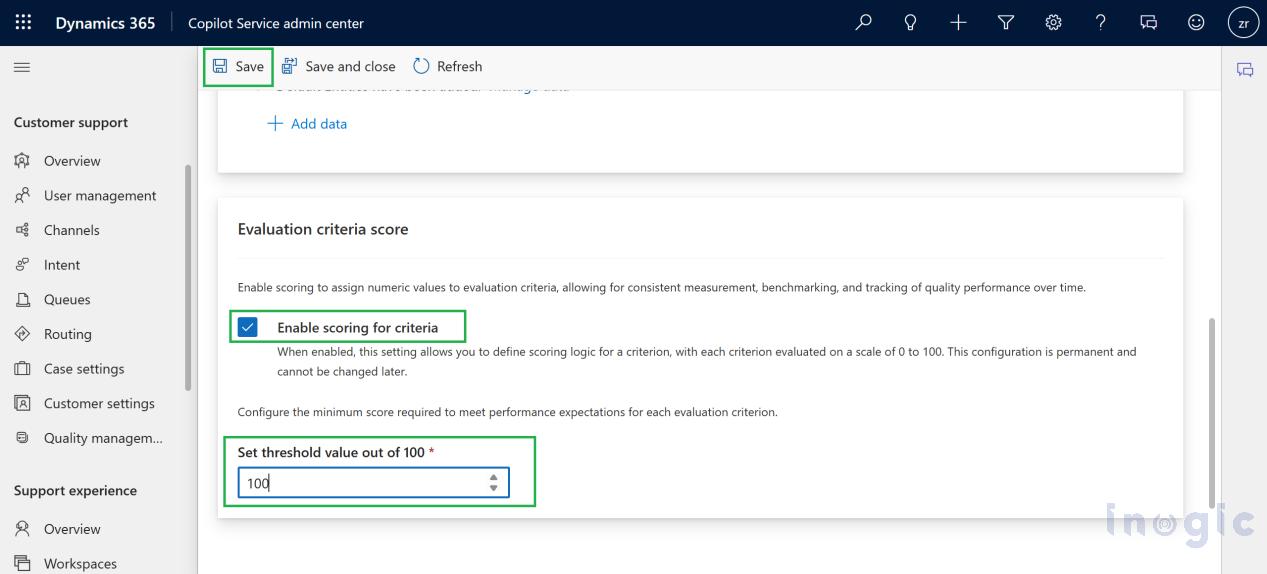

- Enabling scoring for evaluation criteria (once enabled, this setting cannot be disabled)

- Setting threshold values out of 100 to define acceptable quality standards

Each evaluation question and criterion is scored on a scale of 0 to 100, making it easy to identify strong and weak interactions.
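As a worked illustration of how a 0 to 100 score relates to a threshold, the sketch below averages per-question scores and compares the result with a threshold. The unweighted mean and the inclusive `>=` comparison are assumptions chosen for clarity; the product's actual aggregation and threshold semantics may differ.

```python
def overall_score(question_scores: list[int]) -> float:
    """Average per-question scores (each 0-100) into one 0-100 score.

    Assumption: a simple unweighted mean, used here only for illustration.
    """
    if not question_scores:
        raise ValueError("At least one scored question is required")
    for s in question_scores:
        if not 0 <= s <= 100:
            raise ValueError(f"Score {s} is outside 0-100")
    return sum(question_scores) / len(question_scores)


def meets_threshold(score: float, threshold: int) -> bool:
    """True when the score reaches the threshold (assumed inclusive)."""
    return score >= threshold


scores = [100, 50, 80]          # hypothetical per-question scores
result = overall_score(scores)  # (100 + 50 + 80) / 3 = 76.666...
print(round(result, 2), meets_threshold(result, 70))  # 76.67 True
```

With a threshold of 70 this interaction would count as acceptable; raising the threshold to 80 would flag it for review.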

Testing the Quality Evaluation Agent on a Case

By default, two out-of-the-box evaluation criteria are available:

- Closed Conversation Default Criteria

- Support Quality

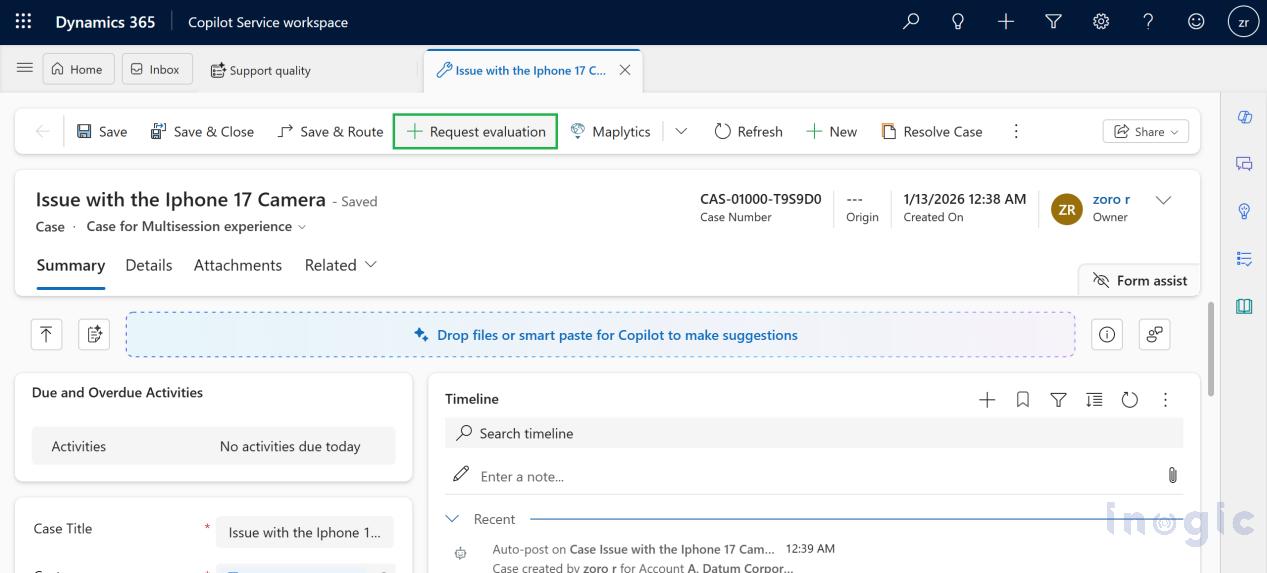

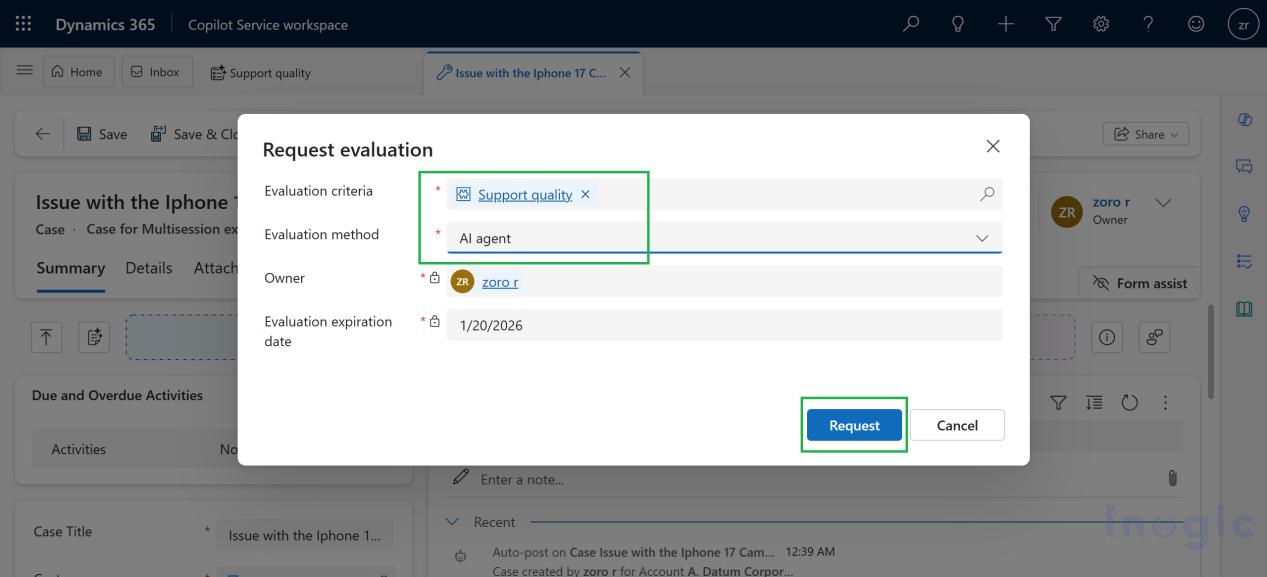

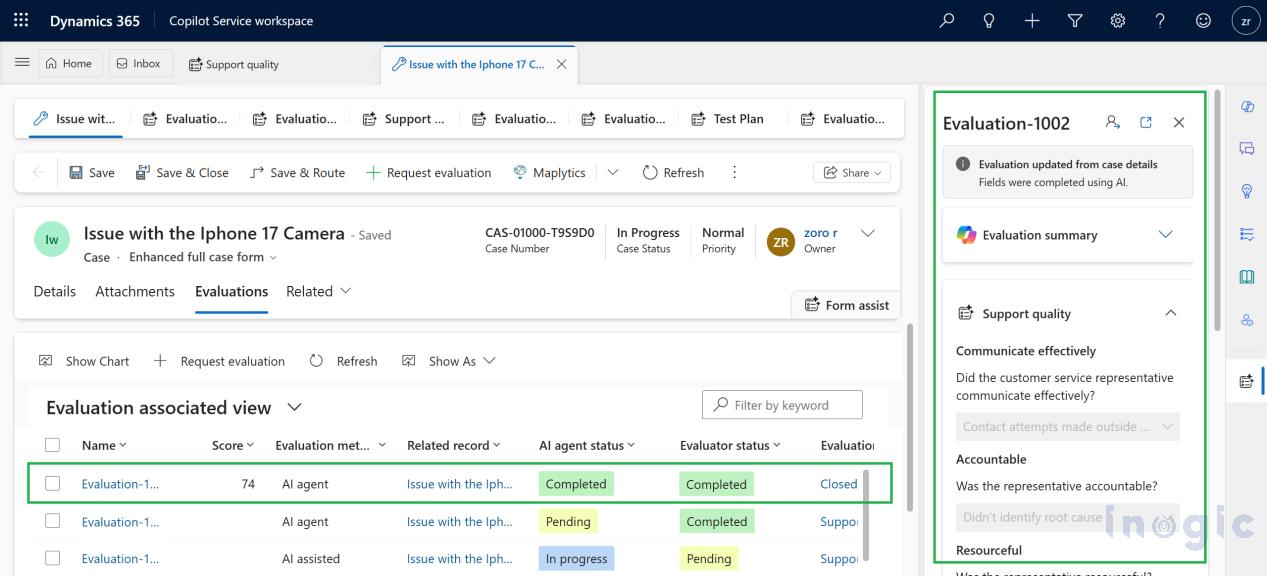

To test the Quality Evaluation Agent, a case record is created and the evaluation is triggered directly from the command bar.

During the evaluation request:

- Support Quality is selected as the evaluation criterion

- AI Agent is chosen as the evaluation method

Once initiated, the AI agent completes the evaluation automatically, generates the quality score, and displays the interaction summary along with detailed criteria results in the Evaluation pane on the right-hand side.

Frequently Asked Questions (FAQ)

What types of interactions does the Quality Evaluation Agent review?

The agent evaluates customer support cases and closed conversations, including interaction summaries and associated communication data.

Is the evaluation process fully automated?

Largely. The AI agent performs evaluations against predefined criteria, and they can run automatically through evaluation plans or be requested on demand. Supervisors still review the results and decide what action to take.

How does this improve customer satisfaction?

By identifying quality gaps early and providing actionable recommendations, the agent enables proactive coaching and consistent service delivery, leading to better customer experiences.

Conclusion

The Quality Evaluation Agent modernizes customer interaction reviews by replacing manual, inconsistent assessments with AI-driven, standardized evaluations. By delivering clear quality scores, actionable insights, and practical recommendations, it helps support teams improve performance continuously, streamline coaching, and deliver more consistent customer experiences at scale.